Difference between revisions of "Scaling"

| (29 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

= Introduction = | = Introduction = | ||

| − | Our maximum throughput on a single server (24 cores Xeon, 10Gbit NIC card) is around 60000 calls without RTP analyzing (only SIP) and 10000 concurrent calls with full RTP analyzing and packet capturing to disk (around 1.6Gbit voip traffic). VoIPmonitor can work in distributed mode where remote sniffers writes to one central database having one GUI accessing all data from all sensors. | + | Our maximum throughput on a single server (24 cores Xeon, 10Gbit NIC card) is around 60000 simultaneous calls without RTP analyzing (only SIP) and 10000 concurrent calls with full RTP analyzing and packet capturing to disk (around 1.6Gbit voip traffic). VoIPmonitor can work in distributed mode where remote sniffers writes to one central database having one GUI accessing all data from all sensors. |

VoIPmonitor is able to use all available CPU cores but there are several bottlenecks which you should consider before deploying and configuring VoIPmonitor (do not hesitate to write email to support@voipmonitor.org if you need more info / help with deploying) | VoIPmonitor is able to use all available CPU cores but there are several bottlenecks which you should consider before deploying and configuring VoIPmonitor (do not hesitate to write email to support@voipmonitor.org if you need more info / help with deploying) | ||

| − | There are three types of bottlenecks - CPU, disk I/O throughput (if | + | There are three types of bottlenecks - CPU, disk I/O throughput (if storing SIP/RTP packets are enabled) and storing CDR to MySQL (which is both I/O and CPU). Since version 11.0 all CPU intensive tasks was split to threads but one bottleneck still remains and that is sniffing packets from kernel which cannot be split to more threads. This is the top most consuming thread and it depends on CPU type and kernel version (and number of packets per second). Below 500Mbit of traffic you do not need to be worried about CPU on usual CPU (Xeon, i5). |

| − | I/O | + | Since voipmonitor version 11 the most problematic bottleneck - I/O throughput was solved by changing write strategy. Instead of writing pcap file for each call they are grouped into year-mon-day-hour-minute.tar files where the minute is start of the call. For example 2000 concurrent calls with enabled RTP+SIP+GRAPH disk IOPS lowered from 250 to 40. Another example on server with 60 000 concurrent calls enabling only SIP packets writing IOPS lowered from 4000 to 10 (yes it is not mistake). Usually single SATA disk with 7.5krpm has only 300 IOPS throughput. |

= CPU bound = | = CPU bound = | ||

| Line 20: | Line 20: | ||

*Commercial ntop.org drivers for intel cards which offloads CPU from 90% to 20-40% for 1.5Gbit (tested) | *Commercial ntop.org drivers for intel cards which offloads CPU from 90% to 20-40% for 1.5Gbit (tested) | ||

| + | Recent sniffer versions with kernel >3.2 is able to sniff up to 2Gbit voip traffic on 10Gbit intel card with native intel drivers. The CPU configuration is Intel(R) Xeon(R) CPU E5-2650 v2 @ 2.60GHz. | ||

| − | + | There is also important thing to check for high throughput (>500Mbit) especially if you use kernel < 3.X which do not balance IRQ from RX NIC interrupts by default. You need to check /proc/interrupts to see if your RX queues are not bound only to CPU0 in case you see in syslog that CPU0 is on 100%. If you are not sure just upgrade your kernel to 3.X and the IRQ balance is by default spread to more CPU automatically. | |

| − | + | Another consideration is limit number of rx tx queues on your nic card which is by default number of cores in the system which adds a lot of overhead causing more CPU cycles. six cores are sufficient with up to 2Gbit traffic on 10Gbit intel card. This is how you can limit it: | |

| − | + | modprobe ixgbe DCA=6,6 RSS=6,6 | |

| − | modprobe ixgbe DCA= | + | If you want to make it by default create file /etc/modprobe.d/ixgbe.conf |

| + | options ixgbe DCA=6,6 RSS=6,6 | ||

The next important thing is to set ring buffer in the hardware for RX to its maximum value. You can get what you can set at maximum by running | The next important thing is to set ring buffer in the hardware for RX to its maximum value. You can get what you can set at maximum by running | ||

| Line 39: | Line 41: | ||

This will prevent to miss packets when interrupts spike occurs | This will prevent to miss packets when interrupts spike occurs | ||

| − | + | Tweak coalesce (hw interrupts) | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | ethtool -C eth3 rx-usecs 1022 | |

| − | + | (put it all togather into /etc/network/interfaces) | |

| + | auto eth3 | ||

| + | iface eth3 inet manual | ||

| + | up ip address add 0/0 dev $IFACE | ||

| + | up ip link set $IFACE up | ||

| + | up ip link set $IFACE promisc on | ||

| + | up ethtool -A $IFACE autoneg off rx off tx off 2>&1 > /dev/null || true | ||

| + | up ethtool -C $IFACE rx-usecs 1022 2>&1 > /dev/null || true | ||

| + | up ethtool -G $IFACE rx 16384 2>&1 > /dev/null || true | ||

| − | |||

| + | On following picture you can see how packets are processed from ethernet card to Kernel space to ethernet driver which queues packets to ring buffer. Ring buffer (available since kernel 2.6.32 and libpcap > 1.0) is packet queue waiting to be fetched by the VoIPmonitor sniffer. This ringbuffer prevents packet loss in case the VoIPmonitor process does not have enough CPU cycles from kernel planner. Once the ringbuffer is filled it is logged to syslog that the sniffer loosed some packets from the ringbuffer. In this case you can increase the ringbuffer from the default 50MB to its maximum value of 2000MB. | ||

| − | |||

| + | From the kernel ringbuffer voipmonitor is storing packets to its internal cache memory (heap) which you can control with packetbuffer_total_maxheap parameter. Default value is 200MB. This cache is also compressed by very fast snappy compression algorithm which allows to store more packets in the cache (about 50% ratio for G711 calls). Packets from the cache heap is sent to processing threads which analyzes SIP and RTP. From there packets are destroyed or continues to the write queue. | ||

| − | + | If voipmonitor sniffer is running with at least "-v 1" you can watch several metrics: | |

tail -f /var/log/syslog (on debian/ubuntu) | tail -f /var/log/syslog (on debian/ubuntu) | ||

tail -f /var/log/messages (on redhat/centos) | tail -f /var/log/messages (on redhat/centos) | ||

| − | voipmonitor[15567]: calls[315][355] PS[C:4 S:29/29 R:6354 A:6484] SQLq[C:0 M:0 Cl:0] heap[0|0|0] comp[54] [12.6Mb/s] t0CPU[5.2%] t1CPU[1.2%] t2CPU[0.9%] tacCPU[4.6|3.0|3.7|4.5%] RSS/VSZ[323|752]MB | + | voipmonitor[15567]: calls[315][355] PS[C:4 S:29/29 R:6354 A:6484] SQLq[C:0 M:0 Cl:0] heap[0|0|0] comp[54] [12.6Mb/s] tarQ[1865] tarCPU[12.0|9.2|3.4|18.4%] t0CPU[5.2%] t1CPU[1.2%] t2CPU[0.9%] tacCPU[4.6|3.0|3.7|4.5%] RSS/VSZ[323|752]MB |

*voipmonitor[15567] - 15567 is PID of the process | *voipmonitor[15567] - 15567 is PID of the process | ||

| Line 73: | Line 77: | ||

*comp - compression buffer ratio (if enabled) | *comp - compression buffer ratio (if enabled) | ||

*[12.6Mb/s] - total network throughput | *[12.6Mb/s] - total network throughput | ||

| + | *tarQ[1865] - number of opened files when tar=yes enabled which is default option for sniffer >11 | ||

| + | *tarCPU[12.0|9.2|3.4|18.4%] - CPU utilization when compressing tar which is enabled by default. Maximum thread is controlled by option tar_maxthreads which is 4 by default | ||

*t0CPU - This is %CPU utilization for thread 0. Thread 0 is process reading from kernel ring buffer. Once it is over 90% it means that the current setup is hitting limit processing packets from network card. Please write to support@voipmonitor.org if you hit this limit. | *t0CPU - This is %CPU utilization for thread 0. Thread 0 is process reading from kernel ring buffer. Once it is over 90% it means that the current setup is hitting limit processing packets from network card. Please write to support@voipmonitor.org if you hit this limit. | ||

*t1CPU - This is %CPU utilization for thread 1. Thread 1 is process reading packets from thread 0, adding it to the buffer and compress it (if enabled). | *t1CPU - This is %CPU utilization for thread 1. Thread 1 is process reading packets from thread 0, adding it to the buffer and compress it (if enabled). | ||

| − | *t2CPU - | + | *t2CPU - on start there is only one thread (pb) - if it will be >50% new 3 threads spawn (hash control thread rm, hash computation rh, thread moving packets to rtp threads rd. If pb > 50% new thread d is created. If d>50% new sip preprocess thread (s) is created. If s thread >50% new extended (e) thread is created (searching and creating Call structure) |

| − | *tacCPU[N0|N1|N...] - %CPU utilization when compressing pcap files | + | *tacCPU[N0|N1|N...] - %CPU utilization when compressing pcap files or when compressing internal memory if tar=yes (which is by default) number of threads grows automatically |

*RSS/VSZ[323|752]MB - RSS stands for the resident size, which is an accurate representation of how much actual physical memory sniffer is consuming. VSZ stands for the virtual size of a process, which is the sum of memory it is actually using, memory it has mapped into itself (for instance the video card’s RAM for the X server), files on disk that have been mapped into it (most notably shared libraries), and memory shared with other processes. VIRT represents how much memory the program is able to access at the present moment. | *RSS/VSZ[323|752]MB - RSS stands for the resident size, which is an accurate representation of how much actual physical memory sniffer is consuming. VSZ stands for the virtual size of a process, which is the sum of memory it is actually using, memory it has mapped into itself (for instance the video card’s RAM for the X server), files on disk that have been mapped into it (most notably shared libraries), and memory shared with other processes. VIRT represents how much memory the program is able to access at the present moment. | ||

| + | *more about [[Logging]] | ||

[[File:kernelstandarddiagram.png]] | [[File:kernelstandarddiagram.png]] | ||

| Line 95: | Line 102: | ||

=== Hardware NIC cards === | === Hardware NIC cards === | ||

| − | We have successfully tested 1Gbit and 10Gbit cards from Napatech which delivers packets to VoIPmonitor at <3% CPU. | + | We have successfully tested 1Gbit and 10Gbit cards from Napatech which delivers packets to VoIPmonitor at <3% CPU. |

| − | |||

= I/O bottleneck = | = I/O bottleneck = | ||

| − | + | Since sniffer version 11.0 number of IOPS (overall random writes) lowered by factor 10 which means that the I/O bottleneck is not a problem anymore. 2000 simultaneous calls takes around 40 IOPS which is 10MB / sec which can handle almost any storage. But still the next section is good reading: | |

| − | |||

| − | |||

| − | |||

| − | + | == filesystem == | |

| − | |||

The fastest filesystem for voipmonitor spool directory is EXT4 with following tweaks. Assuming your partition is /dev/sda2: | The fastest filesystem for voipmonitor spool directory is EXT4 with following tweaks. Assuming your partition is /dev/sda2: | ||

| Line 118: | Line 120: | ||

/dev/sda2 /var/spool/voipmonitor ext4 errors=remount-ro,noatime,nodiratime,data=writeback,barrier=0 0 0 | /dev/sda2 /var/spool/voipmonitor ext4 errors=remount-ro,noatime,nodiratime,data=writeback,barrier=0 0 0 | ||

| − | + | == LSI write back cache policy == | |

| − | + | On many installations the RAID controller is not optimally configured. To check what your cache policy is, run: |

| − | + | rpm -Uhv http://dl.marmotte.net/rpms/redhat/el6/x86_64/megacli-8.00.46-2/megacli-8.00.46-2.x86_64.rpm | |

| − | + | #Debian/Ubuntu - you can use following repository for package megacli installation. https://hwraid.le-vert.net/wiki/DebianPackages | |

megacli -LDGetProp -Cache -L0 -a0 | megacli -LDGetProp -Cache -L0 -a0 | ||

Adapter 0-VD 0(target id: 0): Cache Policy:WriteThrough, ReadAheadNone, Direct, No Write Cache if bad BBU | Adapter 0-VD 0(target id: 0): Cache Policy:WriteThrough, ReadAheadNone, Direct, No Write Cache if bad BBU | ||

| Line 139: | Line 141: | ||

And then set the write back cache again | And then set the write back cache again | ||

megacli -LDSetProp -WB -L0 -a0 | megacli -LDSetProp -WB -L0 -a0 | ||

| − | Please note that this example assumes you have one logical drive if you have more you need to repeat it for all of your virtual disks. | + | Please note that this example assumes you have one logical drive if you have more you need to repeat it for all of your virtual disks. |

| + | |||

| + | == HP SMART ARRAY == | ||

| + | |||

| + | === Centos === | ||

| + | ==== controller class <9 ==== | ||

| + | |||

| + | wget ftp://ftp.hp.com/pub/softlib2/software1/pubsw-linux/p1257348637/v71527/hpacucli-9.10-22.0.x86_64.rpm | ||

| + | yum install hpacucli-9.10-22.0.x86_64.rpm | ||

| + | |||

| + | See status | ||

| + | |||

| + | hpacucli ctrl slot=0 show | ||

| + | |||

| + | Enable cache | ||

| + | |||

| + | hpacucli ctrl slot=0 ld all modify arrayaccelerator=enable | ||

| + | hpacucli ctrl slot=0 modify dwc=enable | ||

| + | |||

| + | Modify cache ratio between read and write: | ||

| + | hpacucli ctrl slot=0 modify cacheratio=50/50 | ||

| + | |||

| + | ==== controller class >=9 ==== | ||

| + | Find package for download on this site: | ||

| + | https://downloads.linux.hpe.com/sdr/repo/mcp/centos/7/x86_64/current/ | ||

| + | |||

| + | Download and install | ||

| + | wget https://downloads.linux.hpe.com/sdr/repo/mcp/centos/7/x86_64/current/ssacli-5.10-44.0.x86_64.rpm | ||

| + | rpm -i ssacli-5.10-44.0.x86_64.rpm | ||

| + | |||

| + | Check the cache-related config | ||

| + | ssacli ctrl slot=0 show config detail|grep ache | ||

| + | |||

| + | Read state, Enable write cache and set cache ratio for write | ||

| + | ssacli ctrl slot=0 modify dwc=? | ||

| + | ssacli ctrl slot=0 modify dwc=enable forced | ||

| + | ssacli controller slot=0 modify cacheratio=0/100 | ||

| + | |||

| + | Make sure that the cache is also enabled when the battery has failed or is not installed | ||

| + | ssacli ctrl slot=0 modify nobatterywritecache=? | ||

| + | ssacli ctrl slot=0 modify nobatterywritecache=enable | ||

| + | |||

| + | |||

| + | |||

| + | === Ubuntu 18.04 === | ||

| + | (Controller class 9 and above) | ||

| + | |||

| + | add to sources.list and install ssacli | ||

| + | deb http://downloads.linux.hpe.com/SDR/downloads/MCP/ubuntu xenial/current non-free | ||

| + | |||

| + | apt update | ||

| + | apt install ssacli | ||

| + | |||

| + | Check the cache-related config | ||

| + | ssacli ctrl slot=0 show config detail|grep ache | ||

| + | |||

| + | Read state, Enable write cache and set cache ratio for write | ||

| + | ssacli ctrl slot=0 modify dwc=? | ||

| + | ssacli ctrl slot=0 modify dwc=enable forced | ||

| + | ssacli controller slot=0 modify cacheratio=0/100 | ||

| + | |||

| + | Make sure that the cache is also enabled when the battery has failed or is not installed | ||

| + | ssacli ctrl slot=0 modify nobatterywritecache=? | ||

| + | ssacli ctrl slot=0 modify nobatterywritecache=enable | ||

| + | |||

| + | == DELL PERC class v8 and newer== | ||

| + | Dell's perccli binary is used instead of megacli for perc class 8 and newer | ||

| + | |||

| + | Reading status | ||

| + | |||

| + | ./perccli64 /c0 show all | ||

| + | |||

| + | Changing mode to writeback | ||

| + | |||

| + | perccli /c0/v0 set wrcache=wb | ||

| + | |||

| + | Changing mode to writethru (for SSDs) | ||

| + | |||

| + | perccli /c0/v0 set wrcache=wt | ||

= MySQL performance = | = MySQL performance = | ||

| + | |||

| + | Before you create the database make sure that you either run | ||

| + | |||

| + | MySQL>SET GLOBAL innodb_file_per_table=1; | ||

| + | |||

| + | or set innodb_file_per_table = 1 in the [mysqld] section of the my.cnf file | ||

| + | |||

| + | This prevents the /var/lib/mysql/ibdata1 file from growing to a giant size. Instead the data is organized in /var/lib/mysql/voipmonitor, which greatly increases I/O performance. | ||

== Write performance == | == Write performance == | ||

| − | Write performance depends a lot if a storage is also used for pcap storing (thus sharing I/O with voipmonitor) and on how mysql handles writes (innodb_flush_log_at_trx_commit parameter - see below). Since sniffer version 6 MySQL tables uses compression which doubles write and read performance almost with no trade cost on CPU (well it depends on CPU type and | + | Write performance depends a lot if a storage is also used for pcap storing (thus sharing I/O with voipmonitor) and on how mysql handles writes (innodb_flush_log_at_trx_commit parameter - see below). Since sniffer version 6 MySQL tables uses compression which doubles write and read performance almost with no trade cost on CPU (well it depends on CPU type and amount of traffic). |

=== innodb_flush_log_at_trx_commit === | === innodb_flush_log_at_trx_commit === | ||

| Line 155: | Line 242: | ||

=== compression === | === compression === | ||

| + | |||

| + | The compression is enabled by default when you use mysql >=5.5 | ||

==== MySQL 5.1 ==== | ==== MySQL 5.1 ==== | ||

| Line 162: | Line 251: | ||

innodb_file_per_table = 1 | innodb_file_per_table = 1 | ||

| − | ==== MySQL | + | ==== MySQL 5.5, 5.6, 5.7 ==== |

| + | |||

| + | innodb_file_per_table = 1 | ||

| + | innodb_file_format = barracuda | ||

| + | |||

| + | ==== MySQL 8.0 ==== | ||

| + | innodb_file_per_table = 1 | ||

| − | |||

| − | |||

==== Tune KEY_BLOCK_SIZE ==== | ==== Tune KEY_BLOCK_SIZE ==== | ||

| − | If you choose KEY_BLOCK_SIZE=2 instead of 8 the compression will be twice better but with CPU penalty on read. We have tested differences between no compression, 8kb and 2kb block size compression on 700 000 CDR with this result (on single core system – we do not know how it behaves on multi core systems). Testing query is select with group by. | + | If you choose KEY_BLOCK_SIZE=2 instead of default 8 the compression will be twice better but with CPU penalty on read. We have tested differences between no compression, 8kb and 2kb block size compression on 700 000 CDR with this result (on single core system – we do not know how it behaves on multi core systems). Testing query is select with group by. |

No compression – 1.6 seconds | No compression – 1.6 seconds | ||

8kb - 1.7 seconds | 8kb - 1.7 seconds | ||

| Line 191: | Line 284: | ||

Partitioning is enabled by default since version 7. If you want to take benefit of it (which we strongly recommend) you need to start with clean database - there is no conversion procedure from old database to partitioned one. Just create new database and start voipmonitor with new database and partitioning will be created. You can turn off partitioning by setting cdr_partition = no in voipmonitor.conf | Partitioning is enabled by default since version 7. If you want to take benefit of it (which we strongly recommend) you need to start with clean database - there is no conversion procedure from old database to partitioned one. Just create new database and start voipmonitor with new database and partitioning will be created. You can turn off partitioning by setting cdr_partition = no in voipmonitor.conf | ||

| + | == SSDs == | ||

| + | When SSDs (partitions with fast access time) are used for the database's datadir, make sure that the mysql options are not the limiting factor. Optimal settings of the following options are essential. | ||

| + | Example for a mysql8.0 server with 256GB of RAM and an SSD filesystem mounted with the options rw,noatime,nodiratime,nobarrier,errors=remount-ro,stripe=1024,data=writeback: | ||

| + | innodb_flush_log_at_trx_commit=0 | ||

| + | innodb_flush_log_at_timeout = 1800 | ||

| + | innodb_flush_neighbors = 0 | ||

| + | innodb_io_capacity = 1000000 | ||

| + | innodb_io_capacity_max = 10000000 | ||

| + | innodb_doublewrite=0 | ||

| + | innodb_flush_method = O_DIRECT | ||

| + | innodb_read_io_threads = 20 | ||

| + | innodb_write_io_threads = 20 | ||

| + | innodb_purge_threads = 20 | ||

| + | innodb_thread_concurrency = 40 | ||

| + | transaction-isolation = READ-UNCOMMITTED | ||

| + | open_files_limit = 200000 | ||

| + | skip-external-locking | ||

| + | skip-name-resolve | ||

| + | performance_schema=0 | ||

| + | |||

| + | sort_buffer_size = 65M | ||

| + | max_heap_table_size = 24G | ||

| + | innodb_log_file_size = 5G | ||

| + | innodb_log_buffer_size = 2G | ||

| + | innodb_buffer_pool_size = 180G | ||

| + | == High calls per second configuration (>= 15000 CPS) == | ||

| + | [mysqld] | ||

| + | default-authentication-plugin=mysql_native_password | ||

| + | skip-log-bin | ||

| + | symbolic-links=0 | ||

| + | innodb_flush_log_at_trx_commit=0 | ||

| + | innodb_flush_log_at_timeout = 1800 | ||

| + | max_heap_table_size = 24G | ||

| + | innodb_log_file_size = 5G | ||

| + | innodb_log_buffer_size = 2G | ||

| + | innodb_file_per_table = 1 | ||

| + | open_files_limit = 200000 | ||

| + | skip-external-locking | ||

| + | key_buffer_size = 2G | ||

| + | sort_buffer_size = 65M | ||

| + | max_connections = 100000 | ||

| + | max_connect_errors = 1000 | ||

| + | skip-name-resolve | ||

| + | |||

| + | innodb_read_io_threads = 20 | ||

| + | innodb_write_io_threads = 20 | ||

| + | innodb_purge_threads = 20 | ||

| + | innodb_thread_concurrency = 40 | ||

| + | innodb_flush_neighbors = 0 | ||

| + | innodb_io_capacity = 1000000 | ||

| + | innodb_io_capacity_max = 10000000 | ||

| + | innodb_doublewrite = 0 | ||

| + | innodb_buffer_pool_size = 150G | ||

| + | innodb_flush_method = O_DIRECT | ||

| + | innodb_page_cleaners = 15 | ||

| + | innodb_buffer_pool_instances = 15 | ||

| + | |||

| + | log_timestamps = SYSTEM | ||

| + | |||

| + | transaction-isolation = READ-UNCOMMITTED | ||

| + | performance_schema=0 | ||

| + | ==== server voipmonitor.conf ==== | ||

| + | All sensors MUST be configured to connect to one central voipmonitor sniffer, which connects to mysql. Clients do not send SQL to the DB; only the central server communicates with the mysql server. | ||

| + | Client sniffers do not need to mirror packets to the central voipmonitor sniffer. They connect only to send CDRs for central batch database inserts. | ||

| + | id_sensor = 1 | ||

| + | |||

| + | mysql_enable_new_store = per_query | ||

| + | mysql_enable_set_id = yes | ||

| + | server_sql_queue_limit = 50000 // clients will not send its queue when limit is reached | ||

| + | mysqlstore_max_threads_cdr = 9 // maximum 99 threads | ||

| + | |||

| + | server_type_compress = lzo | ||

| + | disable_cdr_indexes_rtp = yes // this will apply only when CDR tables are being created - drop cdr* tables when applying this changes | ||

| + | #check if mysql supports lz4 : SHOW GLOBAL STATUS WHERE Variable_name IN ( 'Innodb_have_lz4'); | ||

| + | #based on mysql / mariadb set one of the mysqlcompress_type: | ||

| + | #for mysql8: | ||

| + | mysqlcompress_type = compression="lz4" | ||

| + | #for mariadb: | ||

| + | mysqlcompress_type = PAGE_COMPRESSED=1 | ||

| + | server_bind = 0.0.0.0 | ||

| + | server_bind_port = 55551 | ||

| + | server_password = yourpassword | ||

| + | |||

| + | query_cache = yes | ||

| + | ==== clients voipmonitor.conf ==== | ||

| − | + | id_sensor = 2 | |

| − | + | query_cache = yes | |

| − | + | ||

| − | + | server_destination = 192.168.0.1 | |

| − | + | server_destination_port = 55551 | |

| − | + | server_password = yourpassword | |

| − | + | mysqlstore_max_threads_cdr = 9 // maximum 99 | |

Revision as of 15:18, 12 October 2021

Introduction

Our maximum throughput on a single server (24-core Xeon, 10Gbit NIC) is around 60000 simultaneous calls without RTP analysis (SIP only), or 10000 concurrent calls with full RTP analysis and packet capture to disk (around 1.6Gbit of VoIP traffic). VoIPmonitor can also work in distributed mode, where remote sniffers write to one central database and a single GUI accesses the data from all sensors.
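As a rough sanity check of the 1.6Gbit figure, the sketch below estimates the wire bandwidth of one call. It assumes G.711 with 20 ms packetization and IPv4 over Ethernet; the codec, the header sizes and the function names are assumptions for illustration, not values taken from VoIPmonitor. Under these assumptions one call is about 171 kbit/s, so 10000 calls come to roughly 1.7Gbit/s, the same order as the number quoted above.

```python
# Rough per-call bandwidth estimate. All constants are assumptions
# (G.711, 20 ms packets, IPv4, Ethernet without preamble and FCS).
PAYLOAD_BYTES = 160       # 20 ms of G.711 audio at 64 kbit/s
RTP_HEADER = 12
UDP_HEADER = 8
IPV4_HEADER = 20
ETHERNET_HEADER = 14
PACKETS_PER_SECOND = 50   # one packet every 20 ms


def call_bitrate_bps(directions: int = 2) -> int:
    """Bits per second on the wire for one call (both RTP streams by default)."""
    frame_bytes = (PAYLOAD_BYTES + RTP_HEADER + UDP_HEADER
                   + IPV4_HEADER + ETHERNET_HEADER)
    return frame_bytes * 8 * PACKETS_PER_SECOND * directions


def total_gbit(concurrent_calls: int) -> float:
    """Aggregate RTP traffic in Gbit/s for the given number of calls."""
    return concurrent_calls * call_bitrate_bps() / 1e9
```

Other codecs or packetization intervals change the result considerably, so treat this only as an order-of-magnitude estimate.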

VoIPmonitor is able to use all available CPU cores, but there are several bottlenecks which you should consider before deploying and configuring it. Do not hesitate to email support@voipmonitor.org if you need more information or help with deployment.

There are three types of bottleneck: CPU, disk I/O throughput (if storing SIP/RTP packets is enabled) and storing CDRs to MySQL (which is both I/O and CPU bound). Since version 11.0 all CPU-intensive tasks have been split into threads, but one bottleneck remains: sniffing packets from the kernel, which cannot be split into more threads. This is the most CPU-consuming thread, and its load depends on the CPU type, the kernel version and the number of packets per second. Below 500Mbit of traffic you do not need to worry about CPU on a common CPU (Xeon, i5).

Since voipmonitor version 11 the most problematic bottleneck, I/O throughput, has been solved by changing the write strategy. Instead of writing a pcap file for each call, calls are grouped into year-mon-day-hour-minute.tar files, where the minute is the start of the call. For example, with 2000 concurrent calls and RTP+SIP+GRAPH saving enabled, disk IOPS dropped from 250 to 40. In another example, on a server with 60 000 concurrent calls writing only SIP packets, IOPS dropped from 4000 to 10 (yes, this is not a mistake). For comparison, a single 7.5krpm SATA disk usually has a throughput of only about 300 IOPS.
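The grouping idea can be illustrated with a minimal sketch. It only shows how calls map to per-minute archives by their start time; the exact file name pattern and the helper names (`tar_name_for_call`, `group_calls`) are assumptions for illustration, and the real spool directory layout of the sniffer may differ.

```python
from datetime import datetime


def tar_name_for_call(call_start: datetime) -> str:
    """Return the per-minute archive name for a call started at call_start.

    The year-mon-day-hour-minute pattern follows the description above;
    the exact on-disk naming is an assumption.
    """
    return call_start.strftime("%Y-%m-%d-%H-%M") + ".tar"


def group_calls(call_starts):
    """Map archive name -> number of calls that would be written into it."""
    buckets = {}
    for start in call_starts:
        name = tar_name_for_call(start)
        buckets[name] = buckets.get(name, 0) + 1
    return buckets
```

Because every call that starts in the same minute lands in the same archive, the number of open files grows with the number of minutes covered rather than with the number of calls, which is what reduces random writes.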

CPU bound

Reading packets

The main thread (called t0CPU), which reads packets from the kernel, cannot be split into more threads, and this limits the number of concurrent calls for the whole server. You can check how much CPU the t0 thread uses by looking in the syslog, where voipmonitor reports CPU and memory information every 10 seconds. If t0CPU is >95% you are at the limit of capturing packets from the interface. Your options are:

- better CPU (faster core)

- kernel >= 3.2 (our latest static binaries support the TPACKET_V3 feature, which speeds up capturing and reduces t0CPU)

- special network card (Napatech, for example, reduces t0CPU to 3% for 1.6Gbit of VoIP traffic)

- commercial ntop.org drivers for Intel cards, which reduce CPU load from 90% to 20-40% for 1.5Gbit (tested)

Recent sniffer versions with kernel >3.2 are able to sniff up to 2Gbit of VoIP traffic on a 10Gbit Intel card with the native Intel drivers. The CPU in this configuration is an Intel(R) Xeon(R) CPU E5-2650 v2 @ 2.60GHz.

Another important thing to check at high throughput (>500Mbit), especially if you use kernel < 3.X, is IRQ balancing: older kernels do not balance RX NIC interrupts across CPUs by default. If you see in syslog that CPU0 is at 100%, check /proc/interrupts to see whether your RX queues are bound only to CPU0. If you are not sure, just upgrade your kernel to 3.X, where IRQs are spread across more CPUs automatically.
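Checking /proc/interrupts by eye is tedious on a machine with many cores. The sketch below sums the interrupt counters per CPU for one interface. It assumes the common layout of /proc/interrupts (a header row of CPU names, then one row per IRQ with the queue name in the last column); the function names and the interface name are placeholders, not part of VoIPmonitor.

```python
def irq_totals_per_cpu(interrupts_text: str, iface: str) -> list:
    """Sum interrupt counts per CPU for all IRQ rows that belong to iface."""
    lines = interrupts_text.splitlines()
    if not lines:
        return []
    cpu_count = len(lines[0].split())   # header row: CPU0 CPU1 ...
    totals = [0] * cpu_count
    for line in lines[1:]:
        fields = line.split()
        # The last column holds the IRQ name, e.g. "eth3-TxRx-0".
        if not fields or iface not in fields[-1]:
            continue
        for cpu, value in enumerate(fields[1:1 + cpu_count]):
            if value.isdigit():
                totals[cpu] += int(value)
    return totals


def cpu0_share(totals: list) -> float:
    """Fraction of the interface's interrupts that were handled by CPU0."""
    total = sum(totals)
    return totals[0] / total if total else 0.0
```

To use it on a live system, pass the contents of /proc/interrupts (for example `open("/proc/interrupts").read()`) and your capture interface name. A share close to 1.0 suggests that the queues are bound to CPU0 only.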

Another consideration is to limit the number of RX/TX queues on your NIC. By default it equals the number of cores in the system, which adds a lot of overhead and costs more CPU cycles. Six queues are sufficient for up to 2Gbit of traffic on a 10Gbit Intel card. This is how you can limit it:

modprobe ixgbe DCA=6,6 RSS=6,6

To make this the default, create the file /etc/modprobe.d/ixgbe.conf with:

options ixgbe DCA=6,6 RSS=6,6

The next important thing is to set the hardware RX ring buffer to its maximum value. You can find the maximum you can set by running

ethtool -g eth3

For example, for an Intel 10Gbit card the default value is 4092, which can be increased to 16384:

ethtool -G eth3 rx 16384

This prevents packet loss when an interrupt spike occurs.
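To check whether an interface already runs with the largest RX ring, the two RX values can be compared automatically. The sketch below parses text in the usual `ethtool -g` layout (a "Pre-set maximums" section followed by "Current hardware settings"). That layout is an assumption and may vary between ethtool versions, and `parse_ring_sizes` is a hypothetical helper name.

```python
def parse_ring_sizes(ethtool_output: str):
    """Return (max_rx, current_rx) parsed from `ethtool -g <iface>` output.

    Either value is None when it cannot be found in the text.
    """
    section = None
    max_rx = current_rx = None
    for line in ethtool_output.splitlines():
        stripped = line.strip()
        if stripped.startswith("Pre-set maximums"):
            section = "max"
        elif stripped.startswith("Current hardware settings"):
            section = "current"
        elif stripped.startswith("RX:"):
            try:
                value = int(stripped.split(":", 1)[1])
            except ValueError:
                continue  # e.g. "n/a" on drivers without this setting
            if section == "max":
                max_rx = value
            elif section == "current":
                current_rx = value
    return max_rx, current_rx
```

If the second value is lower than the first, the ring can still be enlarged with `ethtool -G`.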

Tweak interrupt coalescing (hardware interrupts):

ethtool -C eth3 rx-usecs 1022

(put it all together in /etc/network/interfaces)

    auto eth3
    iface eth3 inet manual
        up ip address add 0/0 dev $IFACE
        up ip link set $IFACE up
        up ip link set $IFACE promisc on
        up ethtool -A $IFACE autoneg off rx off tx off 2>&1 > /dev/null || true
        up ethtool -C $IFACE rx-usecs 1022 2>&1 > /dev/null || true
        up ethtool -G $IFACE rx 16384 2>&1 > /dev/null || true

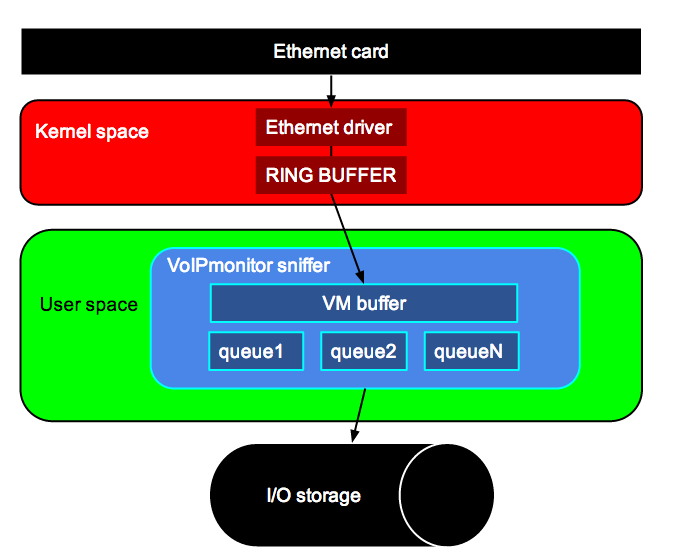

The picture shows how packets travel from the Ethernet card into kernel space, where the Ethernet driver queues them in the ring buffer. The ring buffer (available since kernel 2.6.32 and libpcap > 1.0) is a packet queue waiting to be fetched by the VoIPmonitor sniffer. It prevents packet loss when the VoIPmonitor process does not get enough CPU cycles from the kernel scheduler. When the ringbuffer fills up, a message is logged to syslog that the sniffer lost some packets from the ringbuffer. In this case you can increase the ringbuffer from the default 50MB to its maximum value of 2000MB.

From the kernel ringbuffer voipmonitor stores packets in its internal cache memory (heap), whose size you can control with the packetbuffer_total_maxheap parameter. The default value is 200MB. This cache is also compressed with the very fast snappy compression algorithm, which allows more packets to be stored in the cache (about 50% ratio for G711 calls). Packets from the cache heap are sent to the processing threads, which analyze SIP and RTP. From there packets are either destroyed or continue to the write queue.

If the voipmonitor sniffer is running with at least "-v 1" you can watch several metrics:

    tail -f /var/log/syslog (on debian/ubuntu)
    tail -f /var/log/messages (on redhat/centos)

voipmonitor[15567]: calls[315][355] PS[C:4 S:29/29 R:6354 A:6484] SQLq[C:0 M:0 Cl:0] heap[0|0|0] comp[54] [12.6Mb/s] tarQ[1865] tarCPU[12.0|9.2|3.4|18.4%] t0CPU[5.2%] t1CPU[1.2%] t2CPU[0.9%] tacCPU[4.6|3.0|3.7|4.5%] RSS/VSZ[323|752]MB

- voipmonitor[15567] - 15567 is PID of the process

- calls[X][Y] - X is the number of current calls in voipmonitor memory. Y is the total number of calls in voipmonitor memory (current + queue buffer), including SIP register

- PS - call/packet counters per second. C: number of calls per second. S: X/Y, where X is the number of valid SIP packets per second on SIP ports and Y is the number of all packets on SIP ports. R: number of RTP packets per second belonging to calls registered by voipmonitor. A: all packets per second

- SQLq (SQLqueue) - the number of SQL statements (INSERTs) waiting to be written to MySQL. If this number is growing, MySQL is not able to keep up. See Scaling#innodb_flush_log_at_trx_commit

- heap[A|B|C] - A: % of heap memory used. If it reaches 100, voipmonitor is not able to process packets in real time due to CPU or I/O. B: % of memory used in the packetbuffer. C: % used by the async write buffers (at 100% I/O is blocking: the heap will grow, then the ring buffer will fill up, and then packet loss will occur)

- hoverruns - if this number grows, the heap buffer was completely filled. In this case the primary thread stops reading packets from the ringbuffer, and if the ringbuffer is full packets will be lost. This occurrence is logged to syslog.

- comp - compression buffer ratio (if enabled)

- [12.6Mb/s] - total network throughput

- tarQ[1865] - number of open files when tar=yes is enabled, which is the default option for sniffer >11

- tarCPU[12.0|9.2|3.4|18.4%] - CPU utilization of the tar compression threads (tar compression is enabled by default). The maximum number of threads is controlled by the tar_maxthreads option, which is 4 by default

- t0CPU - %CPU utilization of thread 0. Thread 0 reads from the kernel ring buffer. Once it is over 90%, the current setup is hitting the limit of processing packets from the network card. Please write to support@voipmonitor.org if you hit this limit.

- t1CPU - %CPU utilization of thread 1. Thread 1 reads packets from thread 0, adds them to the buffer and compresses them (if enabled).

- t2CPU - at start there is only one thread (pb). If it goes above 50%, three new threads are spawned: the hash control thread (rm), the hash computation thread (rh) and the thread moving packets to the RTP threads (rd). If pb > 50% a new thread (d) is created. If d > 50% a new SIP preprocess thread (s) is created. If the s thread > 50% a new extended thread (e) is created (searching for and creating the Call structure)

- tacCPU[N0|N1|N...] - %CPU utilization when compressing pcap files, or when compressing internal memory if tar=yes (which is the default). The number of threads grows automatically

- RSS/VSZ[323|752]MB - RSS stands for the resident size, which is an accurate representation of how much actual physical memory the sniffer is consuming. VSZ stands for the virtual size of a process, which is the sum of memory it is actually using, memory it has mapped into itself (for instance the video card’s RAM for the X server), files on disk that have been mapped into it (most notably shared libraries), and memory shared with other processes. VIRT represents how much memory the program is able to access at the present moment.

- more about Logging
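The fields above can be pulled out of a status line with standard tools. The following is a minimal sketch, not part of VoIPmonitor: the function names `status_field` and `t0_over_limit` are hypothetical, and the sample line is assembled from the field examples in this list rather than captured from a live system.

```shell
# Print the text inside the brackets that follow a key, e.g. heap[45|30|20] -> 45|30|20.
# $1 = key name, $2 = one status line
status_field() {
    printf '%s\n' "$2" | sed -n "s#.*$1\[\([^]]*\)].*#\1#p"
}

# Succeed (exit status 0) when t0CPU in the given status line is above 90%.
t0_over_limit() {
    t0=$(status_field t0CPU "$1")
    t0=${t0%\%}                       # drop the trailing percent sign
    awk -v v="$t0" 'BEGIN { exit !(v > 90) }'
}

# Illustrative line built from the fields described above (an assumption, not real output).
line='heap[45|30|20] [12.6Mb/s] tarQ[1865] t0CPU[92.5%] RSS/VSZ[323|752]MB'

status_field heap "$line"
status_field RSS/VSZ "$line"
if t0_over_limit "$line"; then
    echo "t0CPU above 90%"
fi
```

A check like this could be run from cron against the last status line in syslog to get an early warning before packets are lost.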

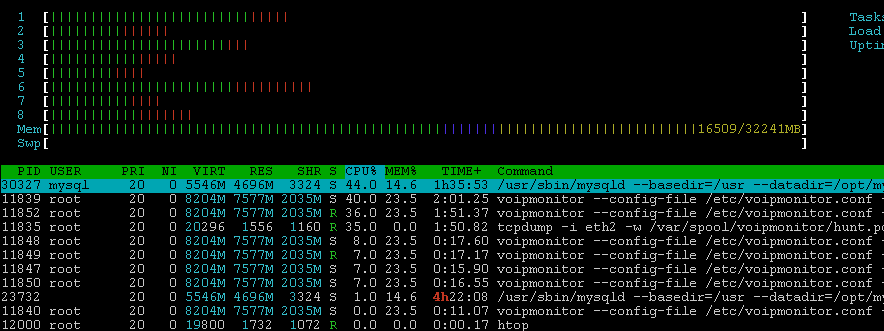

A good tool for measuring CPU usage is htop: http://htop.sourceforge.net/

Software driver alternatives

- TPACKET_V3 - libpcap 1.5.3 and newer together with kernel >= 3.2 support TPACKET_V3, which means that libpcap has to be compiled against a recent Linux kernel. In our tests we were able to sniff 2Gbit of traffic on a 10Gbit Intel card without special drivers, just using the latest libpcap and kernel. Our latest statically compiled binaries (in the download section) already include TPACKET_V3, so it is used whenever you are running kernel >= 3.2.

- Direct NIC Access http://www.ntop.org/products/pf_ring/dna/ - We have tried the DNA driver for a stock 1Gbit Intel card, which reduced CPU load from 100% to 20%.

Hardware NIC cards

We have successfully tested 1Gbit and 10Gbit cards from Napatech, which deliver packets to VoIPmonitor at <3% CPU.

I/O bottleneck

Since sniffer version 11.0 the number of IOPS (overall random writes) has been lowered by a factor of 10, which means that I/O is no longer a bottleneck. 2000 simultaneous calls take around 40 IOPS, which is 10MB/sec, a load that almost any storage can handle. The next section is still worth reading:

filesystem

The fastest filesystem for voipmonitor spool directory is EXT4 with following tweaks. Assuming your partition is /dev/sda2:

    export mydisk=/dev/sda2
    mke2fs -t ext4 -O ^has_journal $mydisk
    tune2fs -O ^has_journal $mydisk
    tune2fs -o journal_data_writeback $mydisk
    #add following line to /etc/fstab
    /dev/sda2 /var/spool/voipmonitor ext4 errors=remount-ro,noatime,nodiratime,data=writeback,barrier=0 0 0
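To confirm that an existing fstab entry carries the options recommended above, a small check can help. This is a sketch only: `has_opt` and `missing_spool_opts` are hypothetical helper names, and the check looks at just three of the options from the fstab line.

```shell
# Succeed when the comma-separated option list $1 contains option $2.
has_opt() {
    case ",$1," in
        *",$2,"*) return 0 ;;
        *)        return 1 ;;
    esac
}

# Print every recommended spool option that is missing from the option list $1.
missing_spool_opts() {
    for opt in noatime nodiratime data=writeback; do
        has_opt "$1" "$opt" || echo "$opt"
    done
}

# Demo with a deliberately incomplete option list: prints nodiratime and data=writeback.
missing_spool_opts 'defaults,noatime'

# On a real host the option list can be read from the fstab entry:
# missing_spool_opts "$(awk '$2 == "/var/spool/voipmonitor" { print $4 }' /etc/fstab)"
```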

LSI write back cache policy

On many installations the RAID controller is not optimally configured. To check your current cache policy, run:

    rpm -Uhv http://dl.marmotte.net/rpms/redhat/el6/x86_64/megacli-8.00.46-2/megacli-8.00.46-2.x86_64.rpm
    #Debian/Ubuntu - you can use following repository for package megacli installation. https://hwraid.le-vert.net/wiki/DebianPackages
    megacli -LDGetProp -Cache -L0 -a0
    Adapter 0-VD 0(target id: 0): Cache Policy:WriteThrough, ReadAheadNone, Direct, No Write Cache if bad BBU

Cache policy write through has very bad random write performance so you probably want to change it to write back cache policy:

    megacli -LDSetProp -WB -L0 -a0
    Battery needs replacement
    So policy Change to WB will not come into effect immediately
    Set Write Policy to WriteBack on Adapter 0, VD 0 (target id: 0) success

Recheck whether the cache was really set to write back. If not, you need to force the write cache when the battery is bad or missing with this command:

    megacli -LDSetProp CachedBadBBU -Lall -aAll
    Set Write Cache OK if bad BBU on Adapter 0, VD 0 (target id: 0) success
    Set Write Cache OK if bad BBU on Adapter 0, VD 1 (target id: 1) success

And then set the write back cache again

megacli -LDSetProp -WB -L0 -a0

Please note that this example assumes you have one logical drive. If you have more, you need to repeat it for all of your virtual disks.
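The recheck step can be scripted by reading the policy out of the `megacli -LDGetProp -Cache` output. The sketch below uses the output line shown earlier as its sample; `write_policy` is a hypothetical helper name, and it is an assumption that a write-back volume reports `WriteBack` in the same position.

```shell
# Read `megacli -LDGetProp -Cache ...` output on stdin and print the write policy
# (the first item after "Cache Policy:") for each virtual disk line.
write_policy() {
    sed -n 's/.*Cache Policy:\([^,]*\),.*/\1/p'
}

# Sample line copied from the output above.
sample='Adapter 0-VD 0(target id: 0): Cache Policy:WriteThrough, ReadAheadNone, Direct, No Write Cache if bad BBU'
policy=$(printf '%s\n' "$sample" | write_policy)
if [ "$policy" != "WriteBack" ]; then
    echo "write policy is $policy, expected WriteBack"
fi

# On a host with the controller installed:
# megacli -LDGetProp -Cache -Lall -aAll | write_policy
```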

HP SMART ARRAY

Centos

controller class <9

    wget ftp://ftp.hp.com/pub/softlib2/software1/pubsw-linux/p1257348637/v71527/hpacucli-9.10-22.0.x86_64.rpm
    yum install hpacucli-9.10-22.0.x86_64.rpm

See status

hpacucli ctrl slot=0 show

Enable cache

    hpacucli ctrl slot=0 ld all modify arrayaccelerator=enable
    hpacucli ctrl slot=0 modify dwc=enable

Modify cache ratio between read and write:

hpacucli ctrl slot=0 modify cacheratio=50/50

controller class >=9

Find package for download on this site:

https://downloads.linux.hpe.com/sdr/repo/mcp/centos/7/x86_64/current/

Download and install

    wget https://downloads.linux.hpe.com/sdr/repo/mcp/centos/7/x86_64/current/ssacli-5.10-44.0.x86_64.rpm
    rpm -i ssacli-5.10-44.0.x86_64.rpm

Check the cache-related configuration

    ssacli ctrl slot=0 show config detail|grep ache

Read the state, enable the write cache and set the cache ratio for writes

    ssacli ctrl slot=0 modify dwc=?
    ssacli ctrl slot=0 modify dwc=enable forced
    ssacli controller slot=0 modify cacheratio=0/100

Make sure that the cache stays enabled even when the battery has failed or is not installed

    ssacli ctrl slot=0 modify nobatterywritecache=?
    ssacli ctrl slot=0 modify nobatterywritecache=enable

Ubuntu 18.04

(Controller class 9 and above)

add to sources.list and install ssacli

deb http://downloads.linux.hpe.com/SDR/downloads/MCP/ubuntu xenial/current non-free

    apt update
    apt install ssacli

Check the cache-related configuration

    ssacli ctrl slot=0 show config detail|grep ache

Read the state, enable the write cache and set the cache ratio for writes

    ssacli ctrl slot=0 modify dwc=?
    ssacli ctrl slot=0 modify dwc=enable forced
    ssacli controller slot=0 modify cacheratio=0/100

Make sure that the cache stays enabled even when the battery has failed or is not installed

    ssacli ctrl slot=0 modify nobatterywritecache=?
    ssacli ctrl slot=0 modify nobatterywritecache=enable

DELL PERC class v8 and newer

Dell's perccli binary is used instead of megacli for perc class 8 and newer

Reading status

./perccli64 /c0 show all

Changing mode to writeback

perccli /c0/v0 set wrcache=wb

Changing mode to writethru (for SSDs)

perccli /c0/v0 set wrcache=wt

MySQL performance

Before you create the database make sure that you either run

MySQL>SET GLOBAL innodb_file_per_table=1;

or set innodb_file_per_table = 1 in the [mysqld] section of the my.cnf file.

This prevents the /var/lib/mysql/ibdata1 file from growing to a giant size. Instead, data is organized in per-table files under /var/lib/mysql/voipmonitor, which greatly increases I/O performance.

Write performance

Write performance depends a lot on whether the storage is also used for storing pcap files (and thus shares I/O with voipmonitor) and on how MySQL handles writes (the innodb_flush_log_at_trx_commit parameter, see below). Since sniffer version 6 the MySQL tables use compression, which doubles write and read performance at almost no CPU cost (although this depends on the CPU type and the amount of traffic).

innodb_flush_log_at_trx_commit

The default value of 1 means that each update transaction commit (or each statement outside of a transaction) needs to flush the log to disk, which is rather expensive, especially if you do not have a battery-backed cache. Many applications are OK with value 2, which means the log is not flushed to disk on commit but only to the OS cache. The log is still flushed to disk each second, so you would normally not lose more than 1-2 seconds' worth of updates. Value 0 is a bit faster but a bit less safe, as you can lose transactions even if only the MySQL server crashes. Value 2 only causes data loss on a full OS crash. If you are importing or altering the cdr table, it is strongly recommended to temporarily set innodb_flush_log_at_trx_commit = 0, and to turn off the binlog if you are importing CDRs via inserts.

innodb_flush_log_at_trx_commit = 2
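The temporary change recommended for imports can be applied at runtime, since innodb_flush_log_at_trx_commit is a dynamic global variable and sql_log_bin is a session variable. The following is a rough sketch: `wrap_import` is a hypothetical helper, the file and database names in the usage line are placeholders, and restoring the value to 2 assumes the setting shown above is your normal configuration.

```shell
# Print the dump file $1 wrapped in the temporary settings described above:
# relax log flushing, disable binary logging for this session, replay the
# INSERTs, then restore log flushing.
wrap_import() {
    echo 'SET GLOBAL innodb_flush_log_at_trx_commit = 0;'
    echo 'SET sql_log_bin = 0;'
    cat "$1"
    echo 'SET GLOBAL innodb_flush_log_at_trx_commit = 2;'
}

# Usage (placeholder names):
# wrap_import cdr_inserts.sql | mysql voipmonitor
```

The mysql client stops at the first failing statement in batch mode, so after a failed import the final restore statement has to be run by hand.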

compression

The compression is enabled by default when you use mysql >=5.5

MySQL 5.1

Set this value in the [mysqld] section of my.cnf:

innodb_file_per_table = 1

MySQL 5.5, 5.6, 5.7

    innodb_file_per_table = 1
    innodb_file_format = barracuda

MySQL 8.0

innodb_file_per_table = 1

Tune KEY_BLOCK_SIZE

If you choose KEY_BLOCK_SIZE=2 instead of the default 8, the compression ratio will be about twice as good, but with a CPU penalty on reads. We have tested the differences between no compression, 8kb and 2kb block size compression on 700 000 CDRs with the following result (on a single-core system; we do not know how it behaves on multi-core systems). The test query is a SELECT with GROUP BY.

- No compression: 1.6 seconds
- 8kb: 1.7 seconds
- 4kb: 8 seconds

Read performance

Read performance depends on how big the database is, how fast the disk operates and how much memory is allocated for the InnoDB cache. Since sniffer version 7 all large tables use partitioning by days, which reduces the need to allocate a very large cache to get good performance for the GUI. Partitioning works since MySQL 5.1 and is highly recommended. It also allows old data to be removed instantly by dropping a partition instead of deleting rows with DELETE, which can take hours on very large tables (millions of rows).

innodb_buffer_pool_size

This is a very important variable to tune if you’re using InnoDB tables. InnoDB tables are much more sensitive to buffer size than MyISAM. MyISAM may work reasonably well with the default key_buffer_size even with a large data set, but InnoDB will crawl with the default innodb_buffer_pool_size. The InnoDB buffer pool caches both data and index pages, so you do not need to leave space for the OS cache, and values up to 70-80% of memory often make sense for InnoDB-only installations.

We recommend setting this value to 50% of your available RAM: 2GB at least, 8GB is optimal. It all depends on how many CDRs you have per day.

Put this into the [mysqld] section of /etc/mysql/my.cnf (or /etc/my.cnf on Red Hat/CentOS):

    innodb_buffer_pool_size = 8G
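The 50% recommendation can be turned into a concrete setting from the amount of installed RAM. This is a minimal sketch: `half_ram_setting` is a hypothetical helper that takes total RAM in kB (the unit used by the MemTotal line of /proc/meminfo on Linux) and prints the value in megabytes.

```shell
# Print an innodb_buffer_pool_size line set to half of the given RAM.
# $1 = total RAM in kB
half_ram_setting() {
    echo "innodb_buffer_pool_size = $(( $1 / 2 / 1024 ))M"
}

# 16777216 kB is 16 GB of RAM, so this prints: innodb_buffer_pool_size = 8192M
half_ram_setting 16777216

# On a Linux host the input can be read from /proc/meminfo:
# half_ram_setting "$(awk '/^MemTotal:/ { print $2 }' /proc/meminfo)"
```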

Partitioning

Partitioning is enabled by default since version 7. If you want to benefit from it (which we strongly recommend), you need to start with a clean database: there is no conversion procedure from an old database to a partitioned one. Just create a new database and start voipmonitor with it, and the partitions will be created. You can turn off partitioning by setting cdr_partition = no in voipmonitor.conf

SSDs

When SSDs (partitions with fast access time) are used for the database's datadir, make sure that the MySQL options are not the limiting factor. Optimal settings of the following options are essential. The example is for a MySQL 8.0 server with 256GB of RAM and an SSD filesystem mounted with the options rw,noatime,nodiratime,nobarrier,errors=remount-ro,stripe=1024,data=writeback:

    innodb_flush_log_at_trx_commit=0
    innodb_flush_log_at_timeout = 1800
    innodb_flush_neighbors = 0
    innodb_io_capacity = 1000000
    innodb_io_capacity_max = 10000000
    innodb_doublewrite=0
    innodb_flush_method = O_DIRECT
    innodb_read_io_threads = 20
    innodb_write_io_threads = 20
    innodb_purge_threads = 20
    innodb_thread_concurrency = 40
    transaction-isolation = READ-UNCOMMITTED
    open_files_limit = 200000
    skip-external-locking
    skip-name-resolve
    performance_schema=0
    sort_buffer_size = 65M
    max_heap_table_size = 24G
    innodb_log_file_size = 5G
    innodb_log_buffer_size = 2G
    innodb_buffer_pool_size = 180G

High calls per second configuration (>= 15000 CPS)

    [mysqld]
    default-authentication-plugin=mysql_native_password
    skip-log-bin
    symbolic-links=0
    innodb_flush_log_at_trx_commit=0
    innodb_flush_log_at_timeout = 1800
    max_heap_table_size = 24G
    innodb_log_file_size = 5G
    innodb_log_buffer_size = 2G
    innodb_file_per_table = 1
    open_files_limit = 200000
    skip-external-locking
    key_buffer_size = 2G
    sort_buffer_size = 65M
    max_connections = 100000
    max_connect_errors = 1000
    skip-name-resolve
    innodb_read_io_threads = 20
    innodb_write_io_threads = 20
    innodb_purge_threads = 20
    innodb_thread_concurrency = 40
    innodb_flush_neighbors = 0
    innodb_io_capacity = 1000000
    innodb_io_capacity_max = 10000000
    innodb_doublewrite = 0
    innodb_buffer_pool_size = 150G
    innodb_flush_method = O_DIRECT
    innodb_page_cleaners = 15
    innodb_buffer_pool_instances = 15
    log_timestamps = SYSTEM
    transaction-isolation = READ-UNCOMMITTED
    performance_schema=0

server voipmonitor.conf

All sensors MUST be configured to connect to one central voipmonitor sniffer, which connects to MySQL. Clients do not send SQL to the database; only the central server communicates with the MySQL server. Client sniffers do not need to mirror packets to the central voipmonitor sniffer; they connect only to send CDRs for central batch database inserts.

    id_sensor = 1
    mysql_enable_new_store = per_query
    mysql_enable_set_id = yes

    # clients will not send its queue when limit is reached
    server_sql_queue_limit = 50000
    # maximum 99 threads
    mysqlstore_max_threads_cdr = 9
    server_type_compress = lzo

    # this will apply only when CDR tables are being created - drop cdr* tables when applying this changes
    disable_cdr_indexes_rtp = yes
    #check if mysql supports lz4 : SHOW GLOBAL STATUS WHERE Variable_name IN ( 'Innodb_have_lz4');
    #based on mysql / mariadb set one of the mysqlcompress_type:
    #for mysql8:
    mysqlcompress_type = compression="lz4"
    #for mariadb:
    mysqlcompress_type = PAGE_COMPRESSED=1

    server_bind = 0.0.0.0
    server_bind_port = 55551
    server_password = yourpassword
    query_cache = yes

clients voipmonitor.conf

    id_sensor = 2
    query_cache = yes
    server_destination = 192.168.0.1
    server_destination_port = 55551
    server_password = yourpassword
    # maximum 99
    mysqlstore_max_threads_cdr = 9
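A client can only reach the central sniffer when its server_destination_port and server_password agree with the server's server_bind_port and server_password. The sketch below checks that for two config files. `conf_get` and `client_matches_server` are hypothetical helpers, not VoIPmonitor tools, and they assume one `key = value` pair per line.

```shell
# Print the value of key $1 from config file $2 (first match only).
conf_get() {
    sed -n "s/^[[:space:]]*$1[[:space:]]*=[[:space:]]*//p" "$2" | head -n 1
}

# Succeed when the client's destination port and password match the server's settings.
# $1 = server config file, $2 = client config file
client_matches_server() {
    [ "$(conf_get server_bind_port "$1")" = "$(conf_get server_destination_port "$2")" ] &&
    [ "$(conf_get server_password "$1")" = "$(conf_get server_password "$2")" ]
}

# Demo with throwaway copies of the settings shown above.
dir=$(mktemp -d)
printf '%s\n' 'server_bind = 0.0.0.0' 'server_bind_port = 55551' \
    'server_password = yourpassword' > "$dir/server.conf"
printf '%s\n' 'server_destination = 192.168.0.1' 'server_destination_port = 55551' \
    'server_password = yourpassword' > "$dir/client.conf"
if client_matches_server "$dir/server.conf" "$dir/client.conf"; then
    echo "client and server settings agree"
fi
rm -rf "$dir"
```

Note that two files which both lack these keys also compare as equal, so the check is only meaningful for configs that actually set them.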
